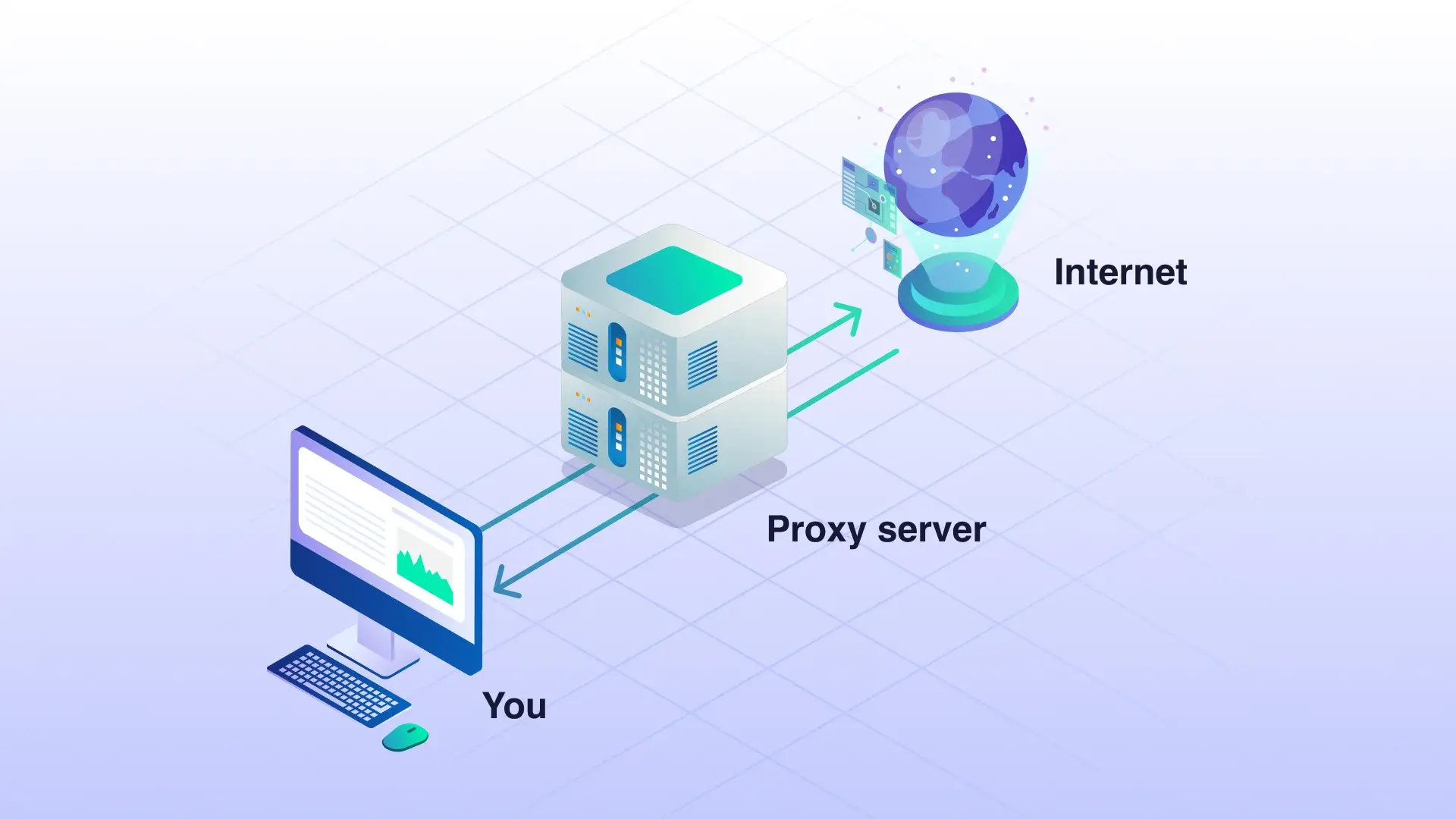

Scraping fails more often because of network hygiene than parsing logic. The health of your proxy layer decides whether you run a steady pipeline or spend nights chasing flaky jobs. Treat proxies like production dependencies and judge them with hard numbers, not anecdotes.

More than 47 percent of internet traffic is automated, and around 30 percent is attributed to malicious bots. As a result, most sizable sites actively gatekeep traffic: rate limits, behavioral checks, and IP reputation scoring are now table stakes.

If your proxies are slow, noisy, or easy to fingerprint, your crawler looks like a bot even before it fetches the first byte.

A modern proxy strategy is less about amassing IPs and more about fitting the web’s technical baseline. Protocol support, latency, and routing diversity correlate directly with your success rate. The moment you measure those consistently, patterns emerge and the operational fixes become obvious.

The web your crawler actually meets

Client capabilities matter. Roughly half of websites enable HTTP/2, and more than a quarter now support HTTP/3. When your proxy stack falls back to HTTP/1.1, you lose connection multiplexing and pay extra round trips per request. That shows up in dashboards as inflated time to first byte and sporadic timeouts under load.

JavaScript is present on well over 95 percent of websites. Even when you do not need a full browser, JavaScript-driven redirects and lightweight challenges are common, so your client should at least recognize them instead of storing a challenge page as content.

Mismatched TLS settings, missing ALPN, or an unusual cipher-suite order can be enough to push your requests into a slower path or onto a challenge page. Small protocol mismatches cascade into measurable failure.
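To land on HTTP/2 at all, a client has to offer it through ALPN during the TLS handshake. The sketch below uses only Python's standard `ssl` module to build a client context that offers `h2` before `http/1.1` and refuses anything older than TLS 1.2. The helper names `build_client_context` and `negotiated_protocol` are hypothetical, and the sketch covers only ALPN and the protocol floor; it does not reproduce a browser's full TLS fingerprint (cipher order, extension set).

```python
import ssl


def build_client_context(alpn=("h2", "http/1.1")):
    """Hypothetical helper: a TLS client context that offers HTTP/2 via ALPN."""
    ctx = ssl.create_default_context()  # system CA store, hostname checking enabled
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse TLS 1.0 and 1.1
    ctx.set_alpn_protocols(list(alpn))  # preference order: h2 first, then HTTP/1.1
    return ctx


def negotiated_protocol(tls_sock):
    """Protocol the server selected via ALPN; None means no ALPN, i.e. HTTP/1.1."""
    return tls_sock.selected_alpn_protocol() or "http/1.1"
```

To use it, wrap an already connected socket (direct, or tunnelled through a proxy) with `ctx.wrap_socket(sock, server_hostname=host)` and pass the result to `negotiated_protocol`. A proxy path that keeps reporting `http/1.1` where direct connections get `h2` is paying the fallback penalty discussed earlier.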

IPv6 and reachability are no longer optional

Around 40 percent of user traffic observed by major providers now uses IPv6. Many networks prefer IPv6 routes when available, and some properties expose certain features only to dual-stack clients.

Proxies that do not support IPv6 miss reachable paths and concentrate load on IPv4 ranges that are already scrutinized. Dual-stack pools spread risk and reduce queueing on congested routes, which lowers latency variance and increases completion rates.
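Before judging a pool's IPv6 coverage, it helps to know which targets publish IPv6 addresses at all. A minimal sketch with the standard `socket.getaddrinfo` call follows; `resolve` and `classify_stack` are hypothetical helper names, and the result reflects only what the local resolver returns, not whether a particular proxy can actually route IPv6.

```python
import socket


def resolve(host, port=443):
    """Resolve host to (address_family, address) pairs via the system resolver."""
    infos = socket.getaddrinfo(host, port, proto=socket.IPPROTO_TCP)
    return [(family, sockaddr[0]) for family, _type, _proto, _name, sockaddr in infos]


def classify_stack(pairs):
    """Label a resolution result as dual-stack, IPv4-only, IPv6-only, or unresolved."""
    families = {family for family, _addr in pairs}
    has_v4 = socket.AF_INET in families
    has_v6 = socket.AF_INET6 in families
    if has_v4 and has_v6:
        return "dual-stack"
    if has_v4:
        return "ipv4-only"
    if has_v6:
        return "ipv6-only"
    return "unresolved"
```

Running `classify_stack(resolve(host))` over your target list shows how much of it an IPv4-only pool could be reaching over a second, less scrutinized path.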

Measuring proxy quality with the right metrics

Start with connection-level outcomes. Track connect success rate, TLS handshake time, and first-byte latency by proxy endpoint. A proxy that connects fast but delivers slow first bytes is often traversing a congested segment or hitting throttled edges.
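One way to collect these timings per endpoint is to drive an HTTP `CONNECT` tunnel by hand and time each phase with `time.perf_counter`. This is a sketch under stated assumptions: the proxy is an unauthenticated HTTP proxy that supports `CONNECT`, the target serves TLS on port 443, and `ProbeResult`, `probe`, and `summarize` are hypothetical names. The first-byte timer starts when the request is sent, so it includes server processing time as well as network delay.

```python
import socket
import ssl
import statistics
import time
from dataclasses import dataclass


@dataclass
class ProbeResult:
    proxy: str
    connected: bool = False  # TCP connect to the proxy succeeded
    ok: bool = False  # tunnel, TLS handshake, and first byte all succeeded
    connect_ms: float = 0.0
    tls_ms: float = 0.0
    ttfb_ms: float = 0.0


def _read_head(sock):
    """Read until the blank line that ends an HTTP header block (or EOF)."""
    buf = b""
    while b"\r\n\r\n" not in buf:
        chunk = sock.recv(4096)
        if not chunk:
            break
        buf += chunk
    return buf


def probe(proxy_host, proxy_port, target_host, timeout=10.0):
    """Time connect, TLS handshake, and first byte through an assumed CONNECT proxy."""
    label = f"{proxy_host}:{proxy_port}"
    t0 = time.perf_counter()
    try:
        raw = socket.create_connection((proxy_host, proxy_port), timeout=timeout)
    except OSError:
        return ProbeResult(label)
    result = ProbeResult(label, connected=True, connect_ms=(time.perf_counter() - t0) * 1000)
    try:
        raw.sendall(f"CONNECT {target_host}:443 HTTP/1.1\r\nHost: {target_host}:443\r\n\r\n".encode())
        status = _read_head(raw).split(b"\r\n", 1)[0].split()
        if status[1:2] != [b"200"]:
            return result  # proxy refused or mangled the tunnel
        t1 = time.perf_counter()
        with ssl.create_default_context().wrap_socket(raw, server_hostname=target_host) as tls:
            result.tls_ms = (time.perf_counter() - t1) * 1000
            t2 = time.perf_counter()
            tls.sendall(f"GET / HTTP/1.1\r\nHost: {target_host}\r\nConnection: close\r\n\r\n".encode())
            result.ok = bool(tls.recv(1))  # blocks until the first response byte arrives
            result.ttfb_ms = (time.perf_counter() - t2) * 1000
    except OSError:  # timeouts and ssl.SSLError are OSError subclasses
        pass
    finally:
        raw.close()  # no-op if wrap_socket already took ownership of the socket
    return result


def summarize(results):
    """Per-proxy connect success rate, end-to-end success rate, and median TTFB."""
    grouped = {}
    for r in results:
        grouped.setdefault(r.proxy, []).append(r)
    summary = {}
    for proxy, rs in grouped.items():
        ttfbs = [r.ttfb_ms for r in rs if r.ok]
        summary[proxy] = {
            "connect_rate": sum(r.connected for r in rs) / len(rs),
            "ok_rate": len(ttfbs) / len(rs),
            "median_ttfb_ms": statistics.median(ttfbs) if ttfbs else None,
        }
    return summary
```

Running `probe` several times per endpoint and feeding the results to `summarize` gives the per-endpoint view described above; an endpoint with a high `connect_rate` but a high `median_ttfb_ms` fits the congested-segment pattern.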

Persistent connections with HTTP/2 should bring first-byte latency down and tighten p95 and p99 tails. If tails remain wide, your pool needs pruning or better geographic routing.
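To check whether tails are actually tightening, compute per-proxy percentiles from the first-byte samples. The sketch uses the nearest-rank definition of a percentile; the `max_p99_over_p50` cutoff of 3 is an arbitrary illustration rather than a recommended value, and `percentile` and `tail_report` are hypothetical names.

```python
import math


def percentile(samples, q):
    """Nearest-rank percentile for q in 0..100 over a non-empty sample list."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered) / 100))
    return ordered[rank - 1]


def tail_report(samples_by_proxy, max_p99_over_p50=3.0):
    """Per-proxy p50/p95/p99 plus a prune flag when the p99 tail is too wide."""
    report = {}
    for proxy, samples in samples_by_proxy.items():
        p50, p95, p99 = (percentile(samples, q) for q in (50, 95, 99))
        report[proxy] = {
            "p50": p50, "p95": p95, "p99": p99,
            # Hypothetical rule: prune if p99 exceeds max_p99_over_p50 times the median.
            "prune": p99 > max_p99_over_p50 * p50,
        }
    return report
```

A proxy whose median looks healthy but whose p99 sits several times higher is exactly the kind of endpoint that survives a median-only review and still generates timeouts.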

Throughput is about physics, not magic. If your average end-to-end latency per request is 800 ms, a single synchronous worker cannot exceed 1.25 requests per second (1 / 0.8 s). Cut latency to 200 ms and the same worker reaches about 5 requests per second without any code changes.
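The same arithmetic in code, working in milliseconds so the numbers stay exact. `workers_needed` applies Little's law (work in flight equals arrival rate times time per request) to estimate how many synchronous workers a target rate requires; the 50 requests per second target and both function names are hypothetical.

```python
import math


def max_rps_per_worker(latency_ms):
    """Upper bound on requests per second for one synchronous worker."""
    return 1000 / latency_ms


def workers_needed(target_rps, latency_ms):
    """Little's law: workers busy at once = target rate x seconds per request."""
    return math.ceil(target_rps * latency_ms / 1000)


print(max_rps_per_worker(800))  # 1.25
print(max_rps_per_worker(200))  # 5.0
print(workers_needed(50, 800), workers_needed(50, 200))  # 40 10
```

Cutting latency from 800 ms to 200 ms shrinks the worker count for the same 50 requests per second from 40 to 10, which is the throughput gain expressed as saved capacity.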

The same math applies to cost. Multiply request volume by payload size and a median page of about two megabytes quickly inflates bandwidth and time budgets. Optimizing connection reuse and compression therefore has a direct dollar impact.
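A back-of-envelope budget makes the amplification concrete. The sketch assumes one million pages at the two-megabyte median, a 10 percent retry rate where every retry re-downloads the full page, and a saturated 100 Mbit/s link; all of these figures and the helper names are illustrative, and `usd_per_gb` is a placeholder for whatever your provider charges.

```python
MB = 1_000_000  # decimal megabyte


def transfer_bytes(pages, page_bytes, retry_pct=0):
    """Bytes downloaded, treating every retry as a full re-download."""
    return pages * page_bytes * (100 + retry_pct) // 100


def transfer_hours(total_bytes, link_mbps):
    """Hours to move total_bytes over a fully saturated link of link_mbps."""
    return total_bytes * 8 / (link_mbps * 1_000_000) / 3600


def egress_cost(total_bytes, usd_per_gb):
    """Cost at a per-gigabyte price; usd_per_gb is a placeholder, not a quote."""
    return total_bytes / 1_000_000_000 * usd_per_gb


total = transfer_bytes(1_000_000, 2 * MB, retry_pct=10)
print(total)  # 2200000000000
print(round(transfer_hours(total, 100), 1))  # 48.9
```

With those inputs the crawl moves 2.2 TB and needs roughly 49 hours of pure transfer time before latency or concurrency limits even enter the picture; every point of retry rate or compression ratio moves both numbers linearly.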

Validate these metrics before you scale. A small preflight run per candidate proxy that exercises DNS resolution, TLS negotiation, HTTP/2 multiplexing, and payload integrity will catch most duds.
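A preflight can be as simple as a dictionary of named checks that each return a boolean, with any exception counted as a failure. This is a hypothetical harness rather than a specific tool: `run_preflight` and `payload_matches` are illustrative names, and hashing only works as an integrity check for static assets, because dynamic pages rarely produce identical bytes twice.

```python
import hashlib
from typing import Callable, Dict


def run_preflight(checks: Dict[str, Callable[[], bool]]) -> Dict[str, bool]:
    """Run each named check; an exception counts as a failed check, not a crash."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            results[name] = False
    return results


def payload_matches(body: bytes, reference_sha256: str) -> bool:
    """Integrity check: compare a body fetched via the proxy with a trusted digest.

    Only meaningful for static assets; dynamic pages rarely hash identically twice.
    """
    return hashlib.sha256(body).hexdigest() == reference_sha256
```

Each entry would wrap one stage, for example `lambda: bool(socket.getaddrinfo(host, 443))` for DNS, and a candidate proxy is admitted only when `all(results.values())` holds.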

If you need a quick diagnostic, an online proxy checker can confirm basic reachability and timing without wiring a custom harness.

Detect interference, not just failure

Outright failures are rare compared to soft blocks. Watch for response mixes that skew to 403, 409, and 429 codes, sudden increases in interstitial HTML, or content length drops that indicate challenge pages.
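These signals can be folded into one heuristic per response. In the sketch the status set mirrors the codes listed above, while the marker strings and the 30 percent length cutoff are hypothetical and should be tuned against challenge pages you have actually captured. `baseline_len` is assumed to be a typical body length for the same URL pattern, for example taken from earlier successful fetches.

```python
SOFT_BLOCK_STATUSES = {403, 409, 429}
# Hypothetical markers; replace them with strings from challenge pages you have seen.
CHALLENGE_MARKERS = (b"captcha", b"challenge", b"access denied")


def looks_soft_blocked(status, body, baseline_len, min_ratio=0.3):
    """Heuristic: blocking status, challenge markers, or a body far shorter than usual."""
    if status in SOFT_BLOCK_STATUSES:
        return True
    lowered = body.lower()
    if any(marker in lowered for marker in CHALLENGE_MARKERS):
        return True
    # A body far below the usual size for this URL pattern often means an interstitial.
    return baseline_len > 0 and len(body) < min_ratio * baseline_len
```

Counting these verdicts rather than only hard errors is what makes reputation pressure visible before requests start failing outright.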

A small but consistent uptick in these signals per ASN or subnet is an early warning that reputation scoring is at work.
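Turning per-response verdicts into an early warning means aggregating them per ASN over a rolling window. The sketch assumes you already map each egress IP to an ASN (for example from an IP-to-ASN dataset); the window size, minimum sample count, and 5 percent warning rate are placeholders, and `AsnMonitor` is a hypothetical name.

```python
from collections import defaultdict, deque


class AsnMonitor:
    """Rolling soft-block rate per ASN (hypothetical helper; thresholds are placeholders)."""

    def __init__(self, window=200, warn_rate=0.05, min_samples=50):
        self.warn_rate = warn_rate
        self.min_samples = min_samples
        # Each ASN keeps only its last `window` verdicts (True = soft-blocked).
        self._verdicts = defaultdict(lambda: deque(maxlen=window))

    def record(self, asn, soft_blocked):
        self._verdicts[asn].append(bool(soft_blocked))

    def rate(self, asn):
        verdicts = self._verdicts.get(asn)  # .get does not create an entry
        return sum(verdicts) / len(verdicts) if verdicts else 0.0

    def warnings(self):
        """ASNs with enough samples whose soft-block rate has reached warn_rate."""
        return sorted(
            asn for asn, v in self._verdicts.items()
            if len(v) >= self.min_samples and self.rate(asn) >= self.warn_rate
        )
```

The minimum sample count keeps a handful of unlucky responses on a quiet ASN from triggering a rotation.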

Rotate away before the pool burns, then revisit with adjusted concurrency and randomized pacing that keeps per-destination request rates inside observed limits.
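Randomized pacing can be implemented as a per-host scheduler that spaces requests by at least `1 / max_rps` and adds random slack on top, so the average rate stays below the limit while the timing is not perfectly regular. `Pacer`, `delay_for`, and the 50 percent jitter default are hypothetical; `max_rps` is assumed to come from the limits you observed for that destination. The clock and random generator are injectable so the behavior can be tested deterministically.

```python
import random
import time


class Pacer:
    """Per-destination pacing with random slack (hypothetical helper)."""

    def __init__(self, max_rps, jitter=0.5, rng=None, clock=time.monotonic):
        self.interval = 1.0 / max_rps  # minimum spacing between requests to one host
        self.jitter = jitter  # extra spacing, as a fraction of the interval
        self.rng = rng if rng is not None else random.Random()
        self.clock = clock
        self._next_slot = {}  # host -> earliest time the next request may start

    def delay_for(self, host):
        """Book the next slot for host and return how many seconds to wait for it."""
        now = self.clock()
        start = max(now, self._next_slot.get(host, now))
        # Gaps are drawn from [interval, interval * (1 + jitter)], so spacing never
        # drops below 1 / max_rps and the average rate stays under max_rps.
        gap = self.interval * (1 + self.rng.uniform(0, self.jitter))
        self._next_slot[host] = start + gap
        return start - now
```

A worker would call `time.sleep(pacer.delay_for(host))` before each request. The class is not thread-safe; guard it with a lock if several threads share one instance.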

Operational guardrails that pay for themselves

Keep per-target concurrency proportional to successful first-byte latency. If your measured p95 is 500 ms, pushing hundreds of parallel requests from one egress will flood connection pools and invite throttling. Spread traffic across diverse ASNs and geographies to dilute any single fingerprint.
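Little's law offers one way to make "proportional" concrete: requests in flight equal the request rate times the time each request stays in flight. The sketch sizes a per-target cap from an allowed rate and the measured p95, then routes new work to the least-loaded ASN. The function names are hypothetical, and the allowed rate per target is an assumption you would take from observed limits.

```python
import math


def concurrency_cap(allowed_rps, p95_latency_ms):
    """Little's law: requests in flight = request rate x time in flight (p95 here)."""
    return max(1, math.ceil(allowed_rps * p95_latency_ms / 1000))


def pick_egress(egress_by_asn, in_flight):
    """Pick the least-loaded egress inside the least-loaded ASN."""
    def asn_load(asn):
        return sum(in_flight.get(e, 0) for e in egress_by_asn[asn])

    asn = min(egress_by_asn, key=asn_load)
    return min(egress_by_asn[asn], key=lambda e: in_flight.get(e, 0))
```

For example, a target tolerating about 10 requests per second with a 500 ms p95 needs only `concurrency_cap(10, 500) == 5` requests in flight, far below the hundreds that would flood a single egress.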

For dynamic sites, cache robots.txt files and sitemaps aggressively and respect Crawl-delay hints; doing so reliably raises acceptance while barely reducing throughput.
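Python's standard `urllib.robotparser.RobotFileParser` already parses `Disallow` rules and `Crawl-delay`, so caching comes down to keeping one parsed instance per host with a time-to-live. In this sketch `RobotsCache` is a hypothetical wrapper, `fetch_text` is an assumed callable that returns the robots.txt body for a host, and the 24-hour default TTL is an arbitrary choice.

```python
import time
import urllib.robotparser


class RobotsCache:
    """Parsed robots.txt per host, refreshed after `ttl` seconds (hypothetical helper)."""

    def __init__(self, fetch_text, ttl=24 * 3600, clock=time.time):
        self.fetch_text = fetch_text  # assumed callable: host -> robots.txt body as str
        self.ttl = ttl
        self.clock = clock
        self._cache = {}  # host -> (fetched_at, RobotFileParser)

    def _parser(self, host):
        cached = self._cache.get(host)
        if cached is not None and self.clock() - cached[0] < self.ttl:
            return cached[1]
        parser = urllib.robotparser.RobotFileParser()
        parser.parse(self.fetch_text(host).splitlines())
        self._cache[host] = (self.clock(), parser)
        return parser

    def allowed(self, host, agent, url):
        return self._parser(host).can_fetch(agent, url)

    def crawl_delay(self, host, agent):
        """Crawl-delay in seconds for agent, or None if robots.txt sets none."""
        return self._parser(host).crawl_delay(agent)
```

The value returned by `crawl_delay` can serve as a floor on the interval between requests to that host, and the cache means robots.txt costs one request per host per TTL rather than one per page.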

Finally, make quality gates part of procurement. Require providers to expose aggregate connect success, median and tail latencies, dual-stack support, and HTTP/2 availability by region.
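Requirements like these are easy to encode as an automated gate over the numbers a provider reports per region. The field names and thresholds below are hypothetical placeholders; derive real thresholds from your own measured baseline rather than from these values.

```python
# Hypothetical thresholds; derive real ones from your own baseline runs.
GATES = {"connect_success_min": 0.98, "p50_ms_max": 400, "p99_ms_max": 2000}


def gate_failures(region, gates=GATES):
    """Names of the procurement gates a provider's per-region report fails."""
    failures = []
    if region["connect_success"] < gates["connect_success_min"]:
        failures.append("connect_success")
    if region["p50_ms"] > gates["p50_ms_max"]:
        failures.append("p50_ms")
    if region["p99_ms"] > gates["p99_ms_max"]:
        failures.append("p99_ms")
    if not region["dual_stack"]:
        failures.append("dual_stack")
    if not region["http2"]:
        failures.append("http2")
    return failures
```

Returning the list of failed gates, rather than a single boolean, makes it easy to show a provider exactly which regions and metrics fall short.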

Re-test regularly and evict endpoints that drift. In practice, a smaller pool with tight variability will outperform a huge but inconsistent roster, cut your retry budget, and free up CPU and bandwidth for work that actually matters.
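Re-testing and eviction can be reduced to comparing each endpoint's fresh numbers against its own baseline. The growth factor of 1.5 on p95 and the 5-point drop in success rate are illustrative thresholds, and `EndpointStats`, `has_drifted`, and `retest_and_evict` are hypothetical names; endpoints missing from the re-test are treated as evicted.

```python
from dataclasses import dataclass


@dataclass
class EndpointStats:
    p95_ms: float
    success_rate: float


def has_drifted(baseline, recent, max_p95_growth=1.5, max_success_drop=0.05):
    """True if p95 grew past a factor of baseline or success fell by more than allowed."""
    return (recent.p95_ms > baseline.p95_ms * max_p95_growth
            or recent.success_rate < baseline.success_rate - max_success_drop)


def retest_and_evict(baselines, recents):
    """Endpoints to keep; anything drifted or absent from the re-test is evicted."""
    return sorted(
        ep for ep, base in baselines.items()
        if ep in recents and not has_drifted(base, recents[ep])
    )
```

Comparing against each endpoint's own baseline, rather than a pool-wide average, catches a good endpoint that is slowly degrading before it drags the whole pool's tail up.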